Why Poor Data Foundations Stall Enterprise AI, and What You Can Do About It

If you’re leading AI initiatives inside a modern enterprise, whether in the CTO, CDO, or CIO organization, you’ve likely hit the same wall that every forward-leaning company faces: your AI may not be failing because of the model. It might be failing because of the data.

The quality, structure, and completeness of your data, the very ground on which your AI strategy stands, might be broken. And the cost of that broken foundation isn’t just technical debt. It’s innovation delayed. It’s trust eroded. It’s ROI that never materializes.

Most enterprise AI projects hit a wall early on, not due to a lack of ambition or modeling capability, but because of the data underneath them.

Without clean, diverse, high-quality datasets, your teams cannot train, test, or trust the AI systems they’re building. And without benchmark-grade evaluation sets, you’re shipping blind.

This is where the failure hits hardest:

1. AI initiatives stall. Your best engineers are wasting cycles waiting for, or rebuilding, evaluation and training datasets. Projects fall behind schedule.

2. AI projects fail to perform. You can’t evaluate against real-world edge cases because you don’t have them in your datasets. You can’t prove business value. You can’t guarantee safety.

3. Stakeholder trust collapses. Executives lose faith. Users complain. The model “sort of works” one day and doesn’t the next. You introduce risk into your product. Or worse, liability.

4. Budgets are burned on rework. You launch. It breaks. You go back and retrain. You relabel. You rewrite. You spend twice. And you still aren’t shipping any faster.

There’s a better way forward and it doesn’t start with the model. It starts with the data. Here’s what you need to do:

Audit your data for quality, noise, and bias. You cannot fix what you don’t measure. Run evaluations and audits. Identify issues such as holes, edge cases, bad or missing information, noise, and unintentional stereotypical bias.

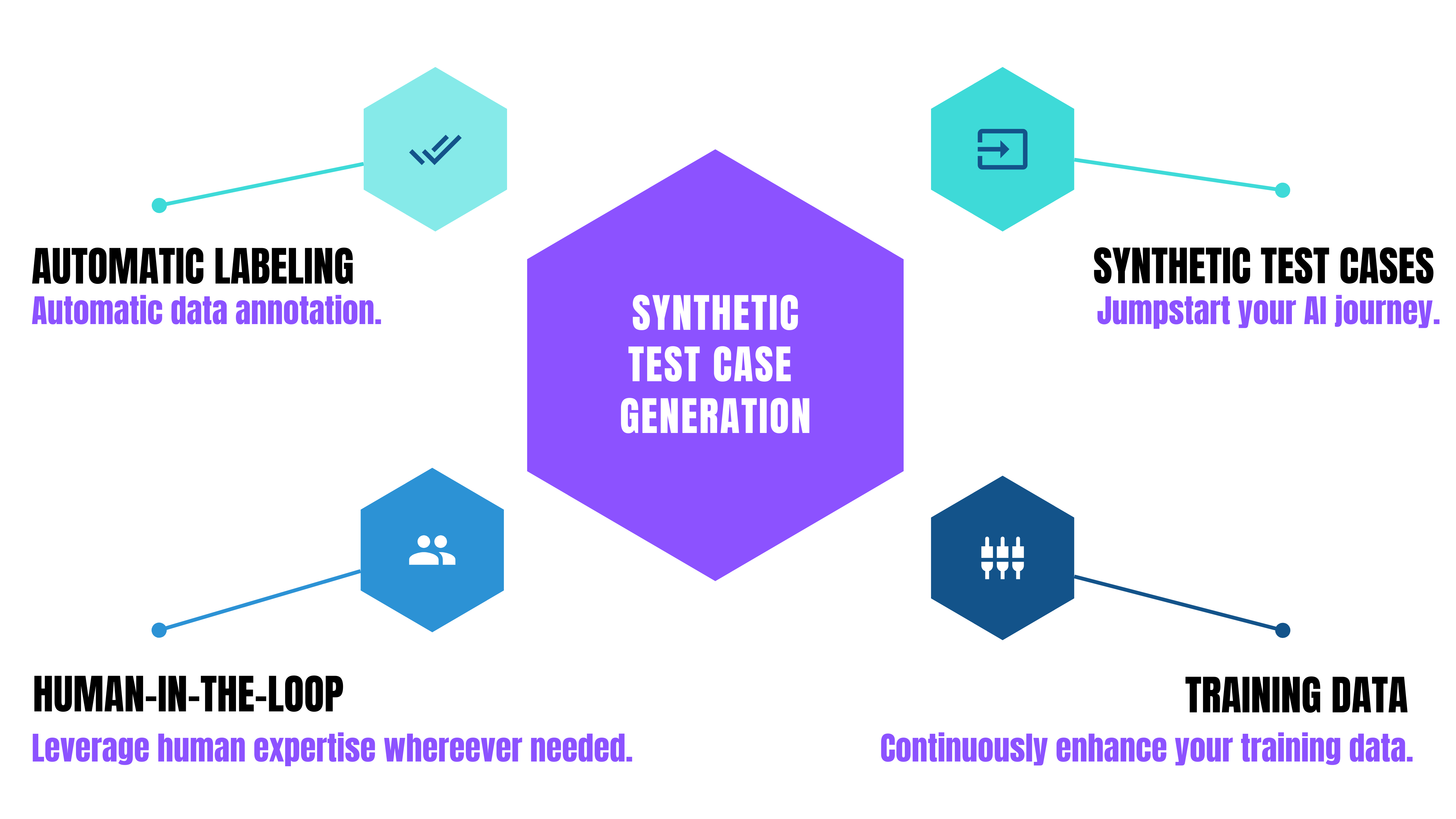
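As a rough illustration of what a first-pass audit can measure, here is a minimal Python sketch. The function name `audit_records`, the field names, and the sample records are all hypothetical and chosen only for this example; it is not AIMon's API. It counts missing values, exact duplicates, and the label distribution, which is often enough to surface holes and skew before any modeling starts.

```python
from collections import Counter


def audit_records(records, required_fields, label_field):
    """Return simple quality indicators for a list of dict records.

    Hypothetical helper for illustration: real audits would also look at
    noise, near-duplicates, and bias, which need domain-specific checks.
    """
    # Count records where each required field is missing or empty.
    missing = {
        field: sum(1 for r in records if r.get(field) in (None, ""))
        for field in required_fields
    }

    # Exact duplicates: identical field/value pairs seen more than once.
    seen = Counter(tuple(sorted(r.items())) for r in records)
    duplicates = sum(count - 1 for count in seen.values())

    # Label distribution, to reveal under-represented classes.
    label_counts = Counter(
        r[label_field] for r in records if r.get(label_field) not in (None, "")
    )

    return {
        "total": len(records),
        "missing": missing,
        "duplicates": duplicates,
        "label_counts": dict(label_counts),
    }


# Made-up sample data, standing in for a support-ticket dataset.
records = [
    {"text": "refund request", "label": "billing"},
    {"text": "refund request", "label": "billing"},
    {"text": "", "label": "billing"},
    {"text": "app crashes on login", "label": None},
    {"text": "cancel my plan", "label": "account"},
]

report = audit_records(records, ["text", "label"], "label")
print(report)
```

Even on five records, the report shows one empty text, one unlabeled row, one exact duplicate, and a label set dominated by a single class. Those are the kinds of gaps that are cheaper to find here than after launch.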

Invest in synthetic and human-annotated datasets. When your existing data isn’t good enough (and it often isn’t), generate what you need. Use realistic, representative, customizable synthetic data. Use machines and humans to validate and refine.

Define KPIs that match the real-world outcomes you care about. Accuracy isn’t enough. You need metrics like bias, adherence, relevance, hallucination, and more, all tied to your specific use case, your users, and your risk appetite.

Standardize continuous evaluation and monitoring, not just experimentation. Ship models only after they’ve passed robust, repeatable, metric-driven evaluations, and establish continuous monitoring for all AI apps. Anything less is unacceptable.
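One way to make "ship only after passing evaluation" concrete is a release gate that compares measured scores against agreed thresholds. The sketch below is an assumption-laden example: the `Threshold` class, the metric names, and every numeric limit are hypothetical placeholders, not AIMon's API or recommended values. Your own KPIs and risk appetite would set the real ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    limit: float
    higher_is_better: bool


def failed_metrics(scores, thresholds):
    """Return the names of metrics that block a release."""
    failures = []
    for name, threshold in thresholds.items():
        value = scores.get(name)
        if value is None:
            # A metric that was never measured counts as a failure.
            failures.append(name)
        elif threshold.higher_is_better and value < threshold.limit:
            failures.append(name)
        elif not threshold.higher_is_better and value > threshold.limit:
            failures.append(name)
    return failures


# Hypothetical thresholds; the numbers are placeholders only.
THRESHOLDS = {
    "relevance": Threshold(limit=0.80, higher_is_better=True),
    "adherence": Threshold(limit=0.90, higher_is_better=True),
    "hallucination_rate": Threshold(limit=0.05, higher_is_better=False),
    "bias_rate": Threshold(limit=0.02, higher_is_better=False),
}

# Made-up scores for a candidate model; bias_rate was not measured.
candidate_scores = {
    "relevance": 0.86,
    "adherence": 0.93,
    "hallucination_rate": 0.08,
}

failures = failed_metrics(candidate_scores, THRESHOLDS)
if failures:
    print("Release blocked by:", ", ".join(failures))
else:
    print("All gates passed")
```

Treating an unmeasured metric as a failure is a deliberate design choice in this sketch: it keeps a model from shipping simply because an evaluation was skipped. The same check can run on a schedule against production samples to serve as a basic monitoring signal.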

AIMon enables enterprise teams to turn data from a blocker into a business enabler through its flagship products:

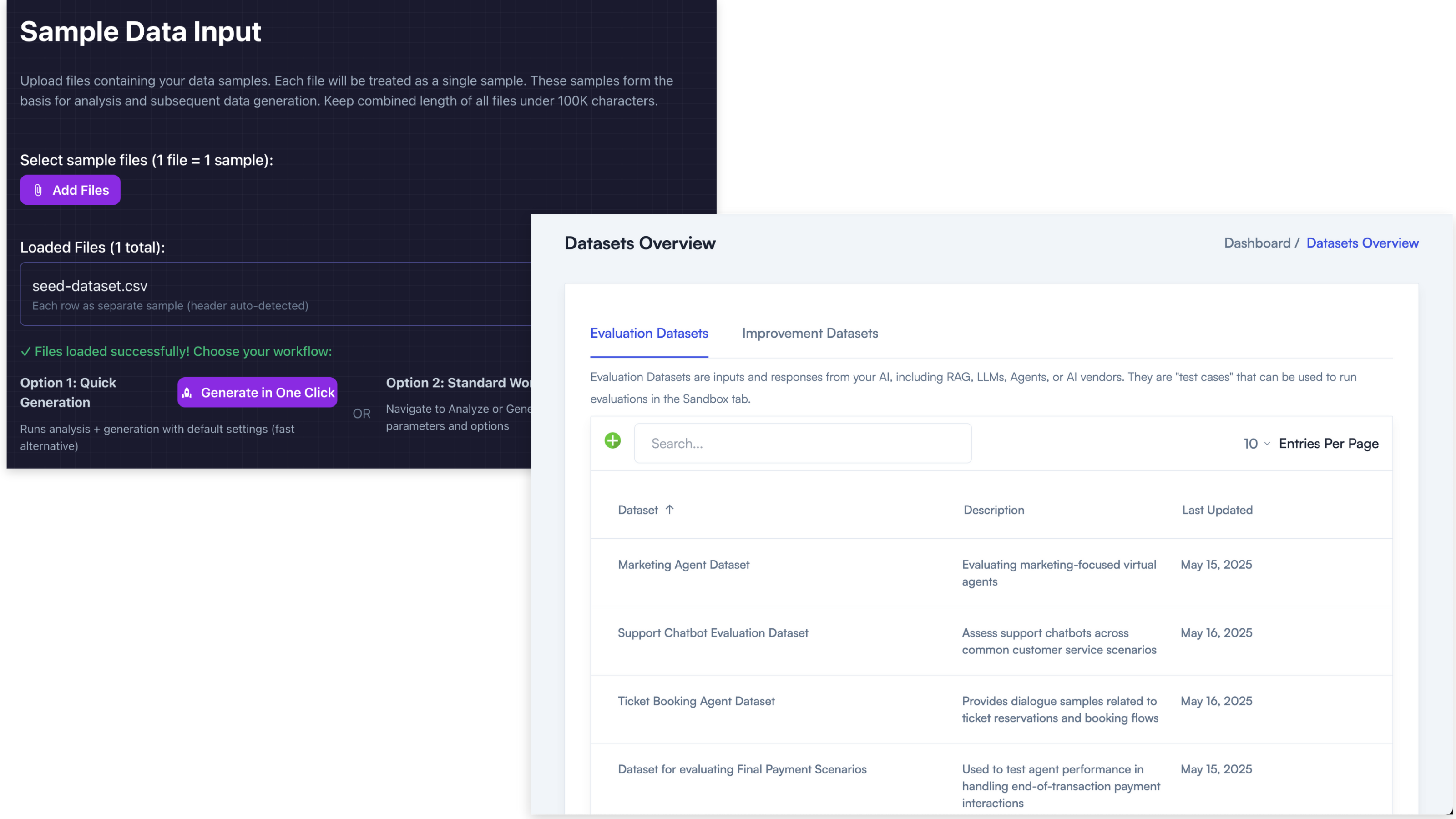

AIMon DataFoundry

Generates diverse, high-quality, production-grade synthetic data that can optionally be augmented by human annotation, so your models are tested and trained on datasets that reflect real-world conditions.

AIMon Guardrails - Data Quality and Safety

Delivers customizable metrics and evaluations for your specific domain. This helps you track critical KPIs around the enterprise data you use for AI projects.

Guardrails also helps you evaluate your AI’s outputs on dimensions such as factual accuracy, relevance, safety, and bias.

When you fix the foundation, everything changes.

You move faster. Your teams aren’t waiting for data. They’re building with it.

You reduce risk. You ship what’s been evaluated, not guessed.

You cut costs. No more rework. No more relabeling. No more rebuilds.

You deliver real value. You go from experimentation to production, and from proof-of-concept to product, with confidence.

If you take one thing from this, let it be this: Your model is only as good as the data you give it. If the data is flawed, everything built on top will be flawed. With AIMon, enterprises no longer need to choose between speed and safety. You can have both.

Backed by Bessemer Venture Partners, Tidal Ventures, and notable angel investors, AIMon is the one platform enterprises need to drive success with AI. We help you build, deploy, and use AI applications with trust and confidence, serving customers including Fortune 200 companies.

Our benchmark-leading ML models support over 20 metrics out of the box and let you build custom metrics using plain-English guidelines. Coverage spans output quality, adversarial robustness, safety, data quality, and business-specific custom metrics, and you can apply any metric as a low-latency guardrail, for continuous monitoring, or in offline evaluations.

Finally, we offer tools to help you iteratively improve your AI, including capabilities for real-world evaluation and benchmarking dataset creation, fine-tuning, and reranking.