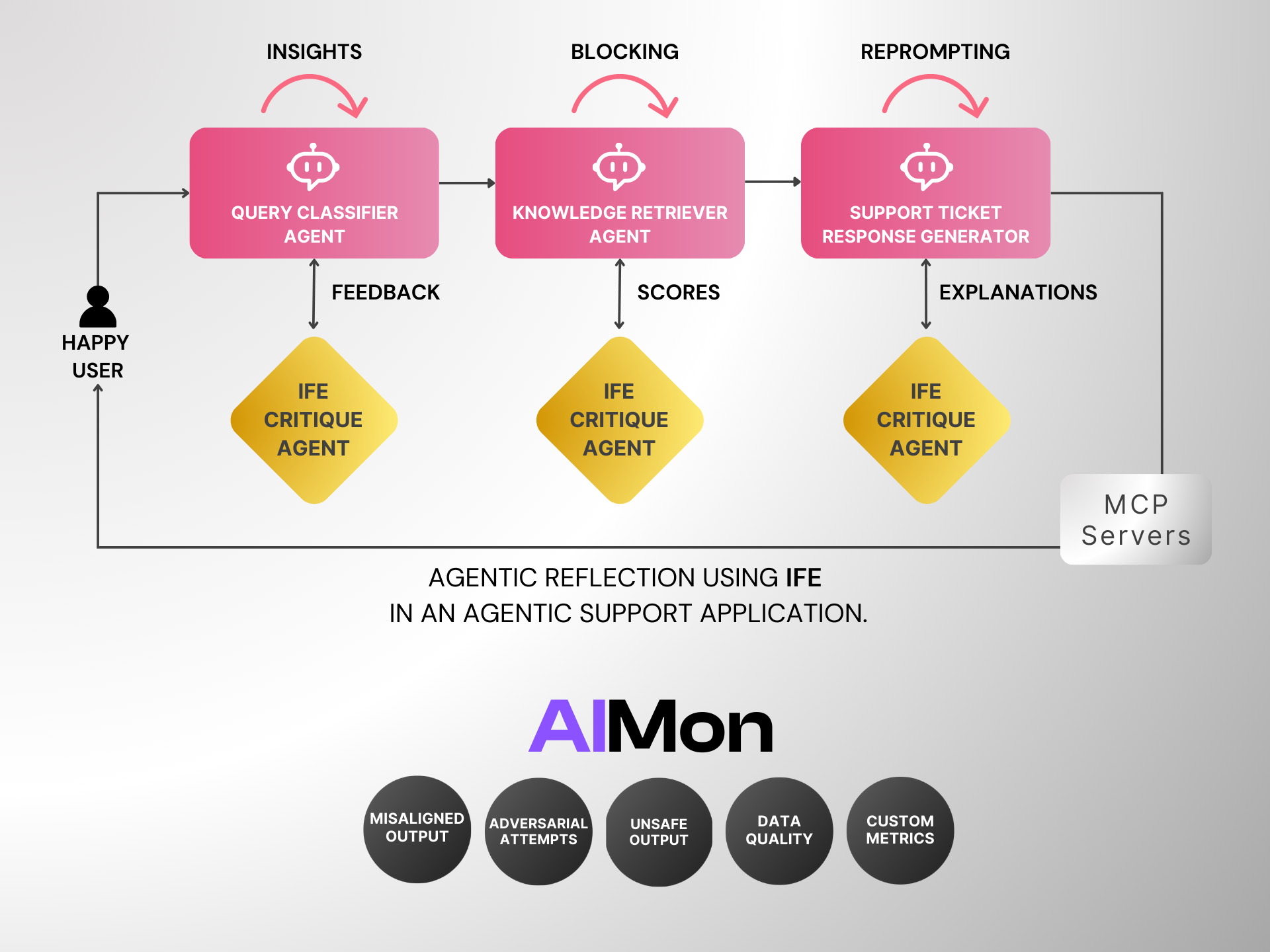

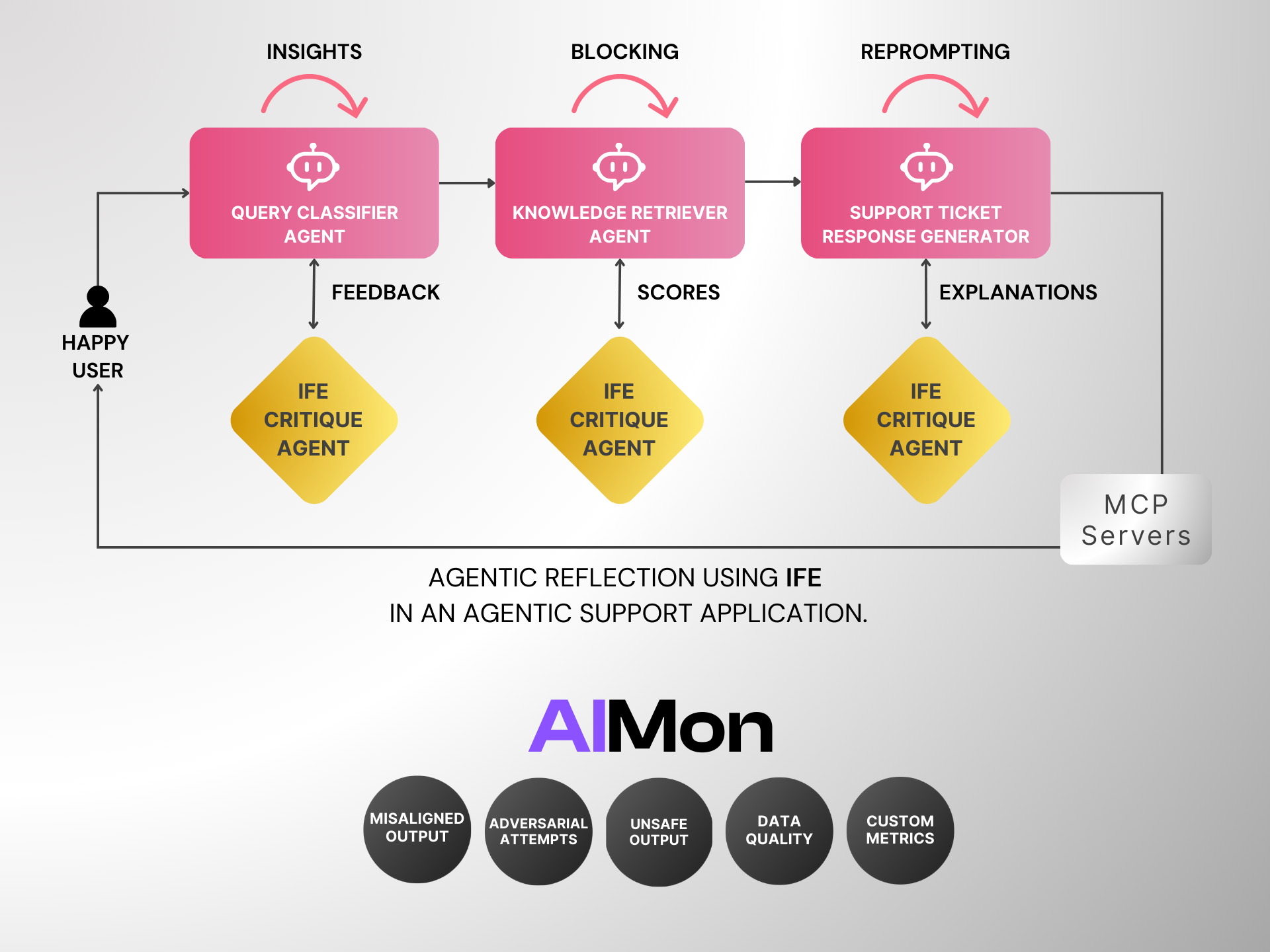

Introducing AIMon’s 200ms Instruction Following Evaluation (IFE) Model

Attain higher agentic success rates with a powerful instruction evaluation model that is the fastest available.

HDM-2: Advancing Hallucination Evaluation for Enterprise LLM Applications

An open-source, 3B-parameter model that can perform contextual and common-knowledge hallucination checks on language model outputs.

Introducing RRE-1: Improving RAG relevance using Retrieval Evaluation and Re-ranking

RRE-1 helps developers evaluate retrieval performance and fix relevance issues by applying what the evaluation reveals during the re-ranking phase. RRE-1 can also be used as a low-latency re-ranker via a convenient API.

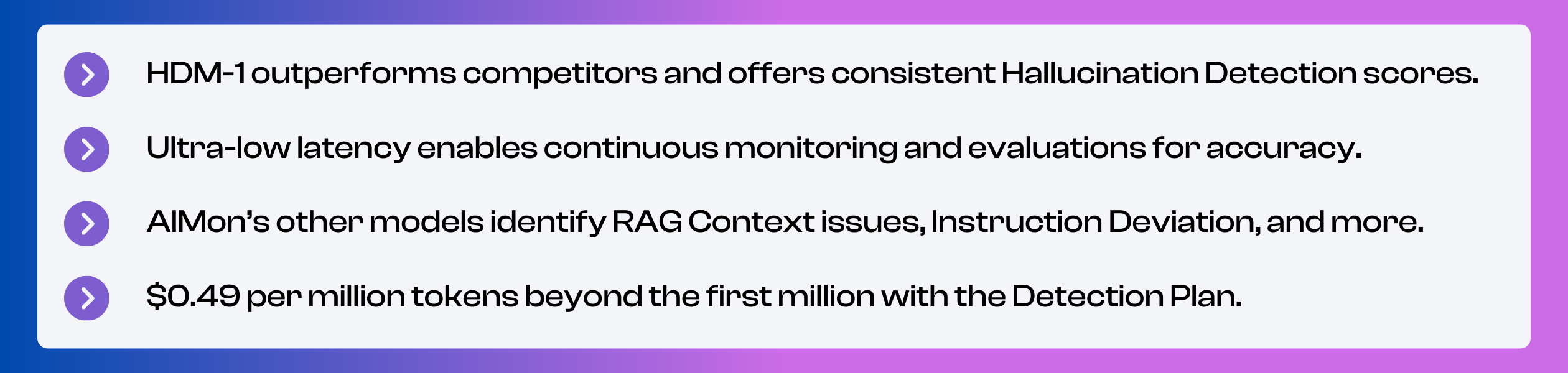

Introducing HDM-1: The Industry-Leading Hallucination Detection Model with Unrivaled Accuracy and Speed

HDM-1 delivers unmatched accuracy and real-time evaluations, setting a new standard for reliability in hallucination evaluations for open-book LLM applications.

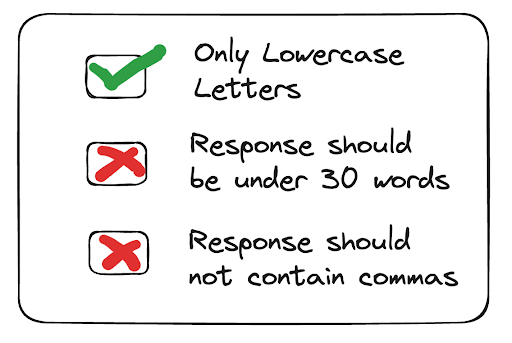

Announcing AIMon’s Instruction Adherence Evaluation for Large Language Models (LLMs)

Evaluation methods for whether an LLM follows a set of verifiable instructions.

From Wordy to Worthy: Increasing Textual Precision in LLMs

Detectors to check for completeness and conciseness of LLM outputs.