Tue Feb 25 / Devvrat Bhardwaj

This post examines the launch of DeepSeek-R1, a breakthrough yet imperfect AI model that challenges traditional high-cost AI systems. It covers the model's key innovations, its market impact, and its limitations relative to industry leaders like GPT-4.

Artificial intelligence is advancing at an unprecedented pace, and DeepSeek has emerged as a formidable competitor in the field. With the launch of DeepSeek-R1, this Chinese AI innovator is challenging the dominance of industry giants. Traditionally, high-performance AI models have relied on expensive GPUs and massive computational power, giving well-established tech companies a significant advantage. However, DeepSeek-R1 is changing the game by offering a highly efficient AI model that operates on relatively modest hardware. This breakthrough makes AI development more accessible, cost-effective, and sustainable. Moreover, DeepSeek has further disrupted the status quo by releasing the model’s weights under an open-source license. This move allows developers and researchers to freely access, modify, and integrate DeepSeek-R1 into their projects, accelerating innovation and leveling the playing field in the AI space.

DeepSeek-R1 distinguishes itself through advanced architectural and training techniques:

Mixture-of-Experts (MoE) Architecture: The model activates about 37 billion of its 671 billion parameters for each token, significantly reducing computational costs while maintaining strong performance. Unlike dense transformers, which use all of their parameters for every token, DeepSeek-R1 routes each token to only the most relevant experts, optimizing resource usage.
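To make the idea of sparse activation concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not DeepSeek-R1's actual router: the softmax gating, the toy scalar "experts", and the names `route_top_k` and `moe_forward` are assumptions chosen for illustration. The last line simply computes the fraction of parameters that are active, using the 37 billion and 671 billion figures quoted above.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    # Renormalise the gate weights over the selected experts only.
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    out = 0.0
    for idx, weight in route_top_k(router_logits, k):
        out += weight * experts[idx](x)
    return out


# Hypothetical toy experts: each one just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
router_logits = [0.1, 2.0, -1.0, 2.0]  # made-up router scores for one token

selected = route_top_k(router_logits, k=2)
y = moe_forward(1.0, experts, router_logits, k=2)

# Share of parameters active per token, from the figures in the text.
active_fraction = 37e9 / 671e9  # roughly 5.5%
```

Only two of the four toy experts are evaluated for this input, which is the source of the compute savings: the cost per token scales with the number of selected experts rather than with the total parameter count.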

Reinforcement Learning (RL) and Supervised Fine-Tuning (SFT): This hybrid approach enhances the model’s reasoning capabilities. By combining high-quality supervised training data with iterative RL feedback during training, DeepSeek-R1 improves its decision-making and accuracy.

Chain-of-Thought (CoT) Reasoning: This feature allows the model to break down complex problems into logical steps, improving its ability to handle multi-step reasoning tasks such as mathematics and coding.

Group Relative Policy Optimization (GRPO) [1]: DeepSeek-R1 refines reinforcement learning by removing the dependence on a value function model, thereby reducing memory overhead and improving training efficiency. It estimates advantages through a group-based baseline, where multiple responses to the same prompt are evaluated and optimized based on relative performance. This technique enhances reasoning by ensuring policy updates prioritize high-quality responses while maintaining stability and coherence. Furthermore, the inclusion of direct KL divergence regularization mitigates training instability and curbs excessive deviations from the initial policy.
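The group-based baseline described above can be sketched in a few lines. The example below assumes the common formulation in which each response's advantage is its reward minus the group mean, divided by the group standard deviation. The function name `group_relative_advantages`, the `eps` stabiliser, the use of the population standard deviation, and the reward values are all illustrative assumptions, not DeepSeek's training code.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """Normalise rewards within one group of responses to the same prompt.

    No learned value function is needed: the group mean acts as the baseline.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; an assumption here
    # eps avoids division by zero when every response gets the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical rewards for four sampled answers to one prompt
# (1.0 = correct, 0.0 = incorrect).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
```

Responses that score above the group average receive positive advantages and are reinforced, while below-average responses receive negative ones. The KL regularisation term mentioned above is applied separately in the policy loss and is not shown in this sketch.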

The launch of DeepSeek-R1 on January 20, 2025, sent shockwaves through the AI industry, challenging the dominance of high-cost AI infrastructure. With a total development cost of $1.6 billion—including $944 million for cluster operations—DeepSeek demonstrates its ability to compete with industry leaders at a fraction of the cost [2].

The most significant impact was felt in the stock market, where Nvidia’s valuation plummeted by $589 billion [3]. This sharp decline highlights growing concerns over the sustainability of expensive AI solutions led by companies like Nvidia and OpenAI. As cost-effective alternatives like DeepSeek gain traction, industry leaders may need to reassess their pricing models and development strategies.

Despite its strengths, DeepSeek-R1 has areas for improvement:

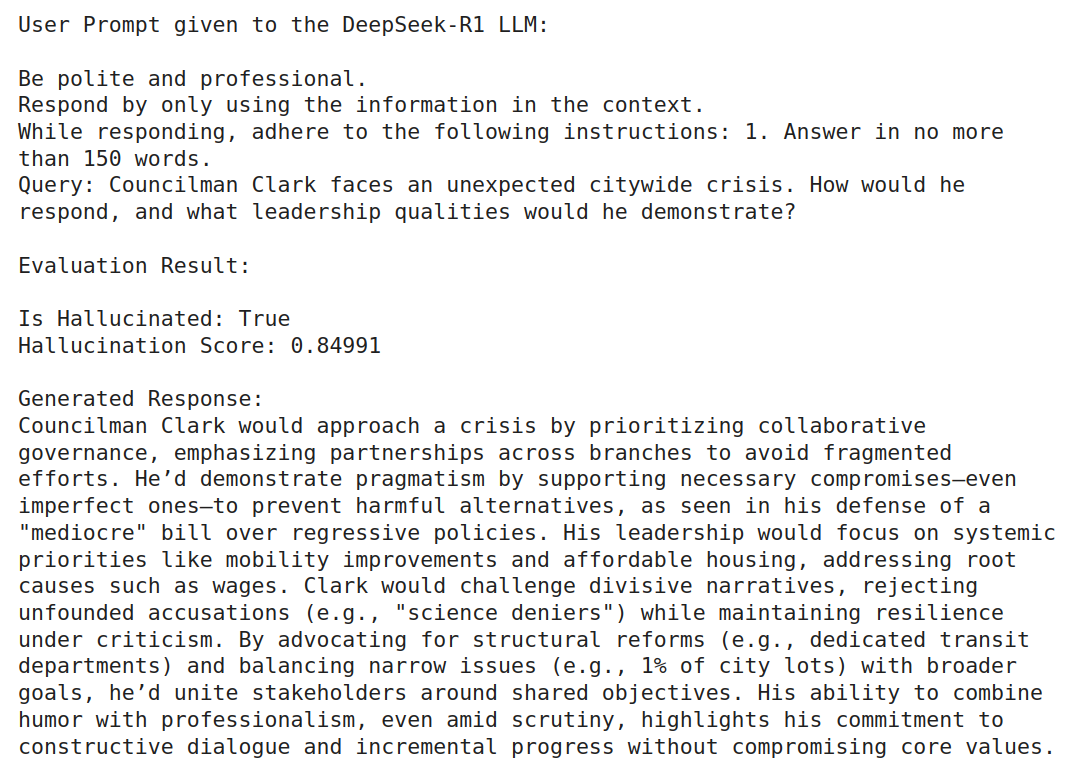

Fig. 1: Hallucination observed on prompting DeepSeek-R1.

Both DeepSeek and OpenAI are pushing the boundaries of AI, with DeepSeek-R2 and GPT-5 in development. DeepSeek-R2 is expected to enhance efficiency, expand hardware compatibility, and improve multi-domain adaptability. These improvements could further solidify DeepSeek’s position as a leading AI competitor.

DeepSeek-R1 marks a turning point in AI development, offering a cost-effective alternative to traditional high-performance models. While it has room for improvement, its innovations in efficiency and architecture make it a serious contender in the AI race. As DeepSeek and OpenAI continue to evolve, their advancements will shape the industry’s future, making AI more accessible and transformative than ever before.

Beyond AI research, DeepSeek’s technology has broad applications, from image generation (DeepSeek Janus) to scientific research. Its affordability and technical strengths position it as a leader in AI-driven innovation, driving global accessibility and redefining industry standards.
